[YouTube Lecture Summary] Andrej Karpathy - Deep Dive into LLMs like ChatGPT

Introduction

Pre-Training

Step 1: Download and preprocess the internet

Step 2: Tokenization

Step 3: Neural network training

Step 4: Inference

Base model

Post-Training: Supervised Finetuning

Conversations

Hallucinations

Knowledge of Self

Models need tokens to think

Things the model cannot do well

Post-Training: Reinforcement Learning

Reinforcement learning

DeepSeek-R1

AlphaGo

Reinforcement learning from human feedback (RLHF)

Preview of things to come

Keeping track of LLMs

Where to find LLMs

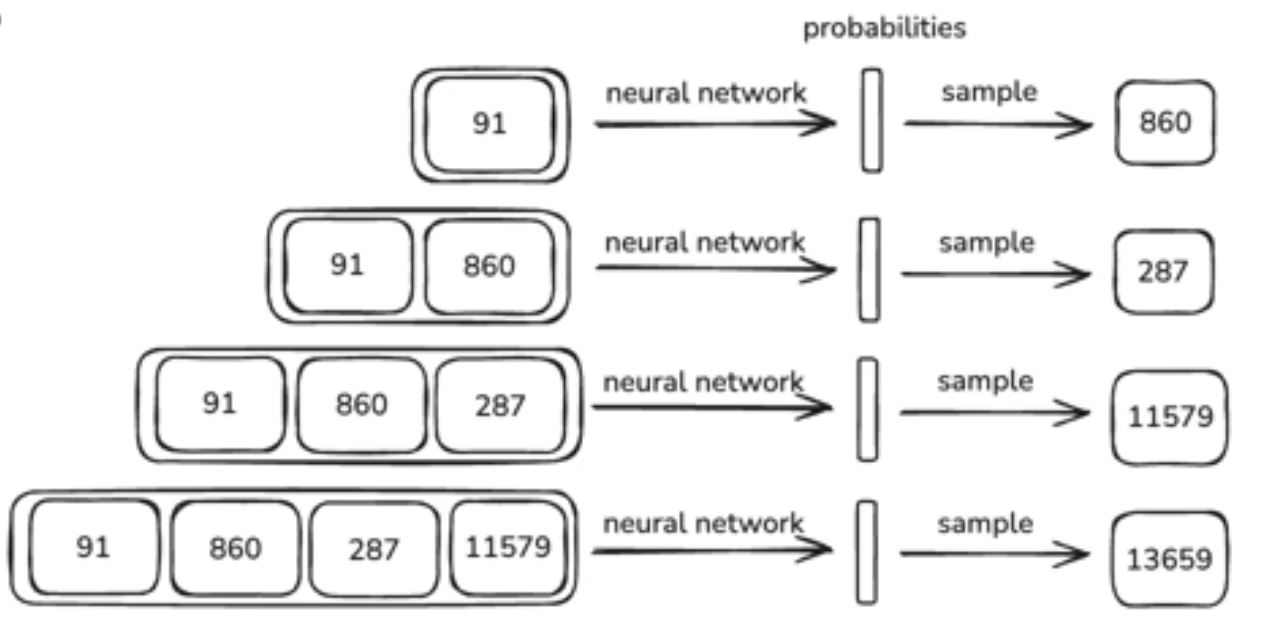

Step 4: Inference

Inference: once training is finished, the model is used to generate new text.

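✅ Core principle: Given some text (the prompt), the model predicts a probability distribution over the next token, samples one token from it, appends that token to the text, and repeats. Likely tokens are picked most often, but the output is not always the single most probable one.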

✅ Example

➡️ Each repetition extends the text by one token. ChatGPT produces a conversation in the same way: it keeps continuing the token sequence that starts with the user's input (the prompt).
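The generation loop can be sketched in a few lines of Python. This is only a toy illustration, not how a real LLM is implemented: the table `NEXT_TOKEN_PROBS` and the functions `sample_next` and `generate` are hypothetical names made up for this example, the "tokens" are whole words, and the table looks only at the previous token, whereas a real model computes the distribution with a neural network conditioned on the whole context.

```python
import random

# Hypothetical toy "model": for each previous token, a probability
# distribution over the next token. A real LLM computes this with a
# neural network over the full context, not a lookup table.
NEXT_TOKEN_PROBS = {
    "the": {"cat": 0.5, "dog": 0.4, "mat": 0.1},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"sat": 0.4, "ran": 0.6},
    "sat": {"on": 0.9, ".": 0.1},
    "ran": {"on": 0.2, ".": 0.8},
    "on": {"the": 1.0},
    "mat": {".": 1.0},
    ".": {"the": 1.0},
}


def sample_next(token, rng):
    """Sample one next token according to the toy distribution."""
    probs = NEXT_TOKEN_PROBS[token]
    candidates = list(probs)
    weights = list(probs.values())
    return rng.choices(candidates, weights=weights, k=1)[0]


def generate(prompt, n_new, seed=0):
    """Append n_new sampled tokens to the prompt, one at a time."""
    rng = random.Random(seed)  # seeded so a run can be reproduced
    tokens = list(prompt)
    for _ in range(n_new):
        # Predict from the text so far, sample, append, repeat.
        tokens.append(sample_next(tokens[-1], rng))
    return tokens


if __name__ == "__main__":
    print(" ".join(generate(["the"], 8, seed=0)))
```

Changing `seed` gives a different continuation of the same prompt, which is why the same question can produce different answers from one run to the next.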